The New Era of AI: Exploring the Frontier of Quantized Large Language Models

In recent years, Large Language Models (LLMs) have taken center stage in artificial intelligence and natural language processing, transforming how we interact with technology every day. From powering sophisticated chatbots to enhancing search engines and beyond, LLMs have become the backbone of numerous applications, pushing the boundaries of what machines can understand and how they communicate. Their rise reflects both their potential and a rapidly growing interest across industry and academia alike.

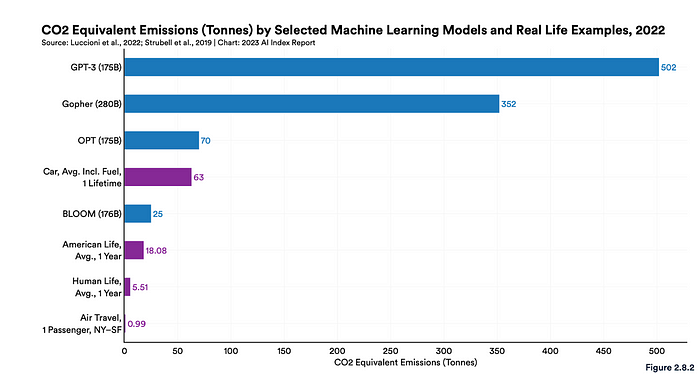

However, as we continue to scale these models to unprecedented levels, aiming for ever more nuanced understanding and generative capabilities, a critical challenge emerges: the immense computational resources required for training and deploying LLMs. The larger these models grow, the more pronounced the issue becomes, straining energy resources, escalating costs, and limiting accessibility to only the most well-funded organizations. It’s here that the concept of quantization comes into sharp focus, presenting a promising avenue for making LLMs more efficient, accessible, and sustainable.

Quantization, in essence, is the process of reducing the numerical precision used to represent a model’s parameters (and often its activations). Storing each weight as an 8-bit integer instead of a 32-bit floating-point number, for example, cuts its memory footprint by a factor of four, and lower-precision arithmetic is cheaper to execute, often without substantially compromising the model’s performance. This is a critical area of research because it holds the key to democratizing access to state-of-the-art AI technologies, ensuring that the benefits of LLMs can be enjoyed more broadly across society. By optimizing how these models are stored and executed, quantization not only makes it feasible to deploy more advanced AI on a wider range of devices, including those with limited processing capabilities, but also contributes to the sustainability of AI technologies by reducing the energy consumption associated with their operation.
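To make the idea concrete, here is a minimal sketch of one common scheme, symmetric per-tensor int8 quantization with absmax scaling, written in Python with NumPy. The function names and the random 256x256 matrix are illustrative assumptions, not taken from any of the papers discussed below.

```python
import numpy as np

def quantize_int8(w: np.ndarray):
    """Symmetric per-tensor int8 quantization with absmax scaling."""
    scale = np.abs(w).max() / 127.0                        # largest magnitude maps to 127
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q: np.ndarray, scale) -> np.ndarray:
    """Map int8 codes back to approximate float32 values."""
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
w = rng.standard_normal((256, 256)).astype(np.float32)

q, scale = quantize_int8(w)
w_hat = dequantize(q, scale)

print(w.nbytes, q.nbytes)                              # 262144 65536: 4x less memory
print(np.abs(w - w_hat).max() <= 0.5 * scale + 1e-6)   # rounding error within half a step
```

Each weight now costs one byte instead of four, and the reconstruction error is bounded by half a quantization step; the research below pushes the same trade-off much further.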

As we delve into quantization and related efficiency techniques through the lens of four recent research papers, it’s important to understand that this is not just a technical endeavor; it’s a crucial step towards a future where the transformative power of Large Language Models can be harnessed more responsibly and inclusively. The first two papers, ZeRO and LoRA, tackle the memory cost of training and the cost of adapting models rather than quantization itself; the last two, BitNet and BitNet b1.58, push weight precision down to 1 and 1.58 bits. Together they shed light on the technical innovations driving this field forward and on the broader implications for the accessibility and environmental footprint of AI technologies.

ZeRO: Memory Optimizations Toward Training Trillion Parameter Models

Let’s start with ZeRO: Memory Optimizations Toward Training Trillion Parameter Models. The paper addresses a significant challenge in training large deep learning models: fitting billion- to trillion-parameter models into limited device memory while maintaining computational and communication efficiency. The authors propose the Zero Redundancy Optimizer (ZeRO), which optimizes memory usage, substantially improving training speed and increasing the size of model that can be trained efficiently.

ZeRO tackles the issue by eliminating memory redundancies in data- and model-parallel training. This approach allows for the scaling of model size proportional to the number of devices used, without a corresponding increase in memory requirement per device. ZeRO achieves this by partitioning model states (optimizer states, gradients, and parameters) across data-parallel processes, thereby reducing the memory required on each device.

In essence, ZeRO combines the computation and communication efficiency of data parallelism with the memory efficiency of model parallelism. Its data-parallel component (ZeRO-DP) works in three cumulative stages:

1. Optimizer State Partitioning (P_os): Each data-parallel process stores only its own shard of the optimizer states, giving roughly a 4x memory reduction with the same communication volume as standard data parallelism.

2. Gradient Partitioning (P_os+g): Gradients are partitioned as well, bringing the reduction to roughly 8x, again without increasing communication volume.

3. Parameter Partitioning (P_os+g+p): The model parameters are partitioned too, so the memory required per device falls linearly as the number of data-parallel processes grows, at the cost of about 50% more communication volume than standard data parallelism.
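The paper’s memory accounting makes these savings easy to check. The sketch below assumes mixed-precision training with Adam, where fp16 parameters and gradients take 2 bytes each per parameter and the optimizer states take K = 12 bytes per parameter (fp32 master weights, momentum, and variance). The function name is hypothetical; the inputs are the paper’s illustrative setting of 7.5 billion parameters and a data-parallel degree of 64.

```python
def zero_memory_gb(psi: float, n_d: int, k: int = 12) -> dict:
    """Per-device model-state memory (GB) under ZeRO's accounting.

    psi: number of parameters; n_d: data-parallel degree;
    k: optimizer-state bytes per parameter (12 for mixed-precision Adam).
    """
    p, g, o = 2 * psi, 2 * psi, k * psi  # bytes: fp16 params, fp16 grads, optimizer states
    gb = 1e9
    return {
        "baseline": (p + g + o) / gb,       # plain data parallelism: full replica everywhere
        "P_os": (p + g + o / n_d) / gb,     # shard optimizer states
        "P_os+g": (p + (g + o) / n_d) / gb, # also shard gradients
        "P_os+g+p": (p + g + o) / n_d / gb, # also shard parameters
    }

for stage, mem in zero_memory_gb(psi=7.5e9, n_d=64).items():
    print(f"{stage:>9}: {mem:6.2f} GB")
```

This prints 120.00, 31.41, 16.64, and 1.88 GB per device for the baseline and the three stages. It counts model states only; activations and temporary buffers, which ZeRO handles with separate optimizations, are ignored.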

Through these stages, ZeRO significantly reduces the memory required to train large models. In the authors’ evaluation, it trained models of over 100 billion parameters with super-linear speedup on 400 GPUs, setting the stage for trillion-parameter models. It also made it possible to train models of up to 13 billion parameters without any model parallelism, which is more complex to apply and less accessible to many researchers, thereby democratizing access to large-model training.

LoRA: Low-Rank Adaptation of Large Language Models

Moving from training models from scratch to adapting existing ones, we turn our focus to LoRA: Low-Rank Adaptation of Large Language Models, a significant stride in making it cheaper and more flexible to adapt pre-trained language models to downstream tasks. The paper proposes a method that sidesteps the computational burden of traditional full fine-tuning, which becomes especially acute for models with hundreds of billions of parameters such as GPT-3.

LoRA works somewhat like a precision tool. In traditional full fine-tuning, every weight of the model is updated, a process that is resource-intensive and, at GPT-3 scale, often impractical. LoRA instead freezes the pre-trained weights and injects a pair of small, trainable matrices, A and B, alongside selected weight matrices (typically the attention projections). Their product BA has a rank r far smaller than the layer’s dimensions and acts as a learned update to the frozen weights, adapting the model’s knowledge to a specific task without retraining all of its parameters.
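A minimal NumPy sketch of the forward pass follows, assuming the initialization the LoRA paper describes (random Gaussian A, zero B, so the update starts at zero) and its alpha / r scaling of the update. The class name, the 0.01 standard deviation, and the r = 8, alpha = 16 values are illustrative choices, and the training loop is omitted.

```python
import numpy as np

class LoRALinear:
    """Frozen weight W0 plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, w0: np.ndarray, r: int, alpha: float, rng: np.random.Generator):
        d_out, d_in = w0.shape
        self.w0 = w0                                    # frozen pre-trained weight
        self.a = rng.normal(0.0, 0.01, size=(r, d_in))  # A: small random Gaussian init
        self.b = np.zeros((d_out, r))                   # B: zeros, so BA = 0 at the start
        self.scale = alpha / r

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # h = W0 x + (alpha / r) * B (A x), for a batch x of shape (batch, d_in)
        return x @ self.w0.T + self.scale * (x @ self.a.T) @ self.b.T

    def trainable_params(self) -> int:
        return self.a.size + self.b.size                # only A and B are trained

rng = np.random.default_rng(0)
d = 1024
layer = LoRALinear(rng.standard_normal((d, d)), r=8, alpha=16, rng=rng)
x = rng.standard_normal((2, d))

print(np.allclose(layer(x), x @ layer.w0.T))  # True: the model is unchanged at init
print(layer.trainable_params(), d * d)        # 16384 trainable vs 1048576 frozen
```

Starting from a zero update means fine-tuning begins exactly at the pre-trained model, and for this layer the trainable parameter count is about 1.6% of the frozen matrix.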

What makes LoRA truly stand out is its efficiency. Because only the small matrices are trained, there is no need to compute gradients or keep optimizer states for the frozen weights, which makes adapting very large models far more practical. To put it in perspective, adapting GPT-3 175B with LoRA cuts the GPU memory needed for training from an eye-watering 1.2 terabytes to a far more manageable 350 gigabytes. Moreover, the checkpoint size (the saved weights needed for each task) drops from 350 gigabytes to roughly 35 megabytes when LoRA is applied with rank r = 4 to only the query and value projection matrices, a reduction of about 10,000x. This isn’t just a minor improvement; it’s a transformative change that opens up new possibilities for deploying state-of-the-art models in a variety of settings.
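The checkpoint figure can be sanity-checked with back-of-the-envelope arithmetic. This sketch assumes GPT-3 175B’s published shape (96 layers, hidden size 12,288), fp16 storage at 2 bytes per parameter, and LoRA with rank 4 on the query and value projections only; it is a rough count, not the paper’s exact bookkeeping.

```python
# Assumed GPT-3 175B shape: 96 layers, hidden size 12288.
n_layers, d_model = 96, 12_288
n_params_full = 175e9
bytes_per_param = 2     # assumption: fp16 storage

r = 4                   # LoRA rank
adapted_per_layer = 2   # W_q and W_v, each d_model x d_model

# Each adapted d x d matrix gets A (r x d) and B (d x r): 2 * d * r parameters.
lora_params = n_layers * adapted_per_layer * 2 * d_model * r

full_ckpt_gb = n_params_full * bytes_per_param / 1e9
lora_ckpt_mb = lora_params * bytes_per_param / 1e6

print(f"LoRA parameters: {lora_params:,}")         # 18,874,368
print(f"full checkpoint: {full_ckpt_gb:.0f} GB")   # 350 GB
print(f"LoRA checkpoint: {lora_ckpt_mb:.1f} MB")   # 37.7 MB
print(f"reduction: ~{full_ckpt_gb * 1e3 / lora_ckpt_mb:,.0f}x")
```

The count lands at about 18.9 million parameters, or roughly 38 MB (36 MiB) in fp16, the same ballpark as the reported 35 MB and a reduction on the order of 10,000x.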

Furthermore, LoRA adds no inference latency: after training, the learned low-rank update (the product of the two small matrices, BA) can be merged into the frozen weights as W = W0 + BA, so the deployed model has exactly the same architecture and cost as the original. Empirically, LoRA matches or exceeds the quality of full fine-tuning on RoBERTa, DeBERTa, GPT-2, and GPT-3 across a range of NLP tasks, showing that top-tier results are achievable without the hefty computational price tag. By bridging the gap between the potential of large language models and the practical limitations of training and deploying them, LoRA offers a pathway to more sustainable, efficient, and accessible AI technologies. This not only democratizes access to cutting-edge models but also encourages a more thoughtful approach to AI development, where innovation is matched with an eye towards environmental and resource constraints.
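The no-added-latency property follows from linearity: x W0^T + s x (BA)^T equals x (W0 + s BA)^T, where s = alpha / r. The NumPy sketch below uses random stand-in matrices rather than trained LoRA factors and checks that folding the scaled update into the base weight gives identical outputs.

```python
import numpy as np

rng = np.random.default_rng(1)
d_out, d_in, r, alpha = 64, 32, 4, 8
w0 = rng.standard_normal((d_out, d_in))  # frozen pre-trained weight
a = rng.standard_normal((r, d_in))       # stand-ins for trained LoRA factors
b = rng.standard_normal((d_out, r))
scale = alpha / r

x = rng.standard_normal((5, d_in))

# Training-time path: base branch plus low-rank branch.
h_unmerged = x @ w0.T + scale * (x @ a.T) @ b.T

# Deployment: fold the update into one dense weight, once, ahead of time.
w_merged = w0 + scale * b @ a
h_merged = x @ w_merged.T

print(np.allclose(h_unmerged, h_merged))  # True: same outputs, a single matmul
print(w_merged.shape == w0.shape)         # True: no extra parameters at inference
```

Switching tasks works the same way in reverse: subtract one task’s update and add another’s, without touching the rest of the model.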

BitNet: Scaling 1-bit Transformers for Large Language Models

The exploration of BitNet: Scaling 1-bit Transformers for Large Language Models unveils an approach aimed at drastically improving the efficiency and scalability of Large Language Models (LLMs) through 1-bit quantization. BitNet introduces BitLinear, a drop-in replacement for the standard linear layer (nn.Linear) that is trained from scratch with binary weights (+1 or -1) and 8-bit activations, substantially shrinking model size and computational demands. Before binarization, BitLinear centralizes the weights to zero mean; after binarization, it applies a scaling factor, the mean absolute value of the original weights, to reduce the error between the binarized and real-valued weights. Consequently, BitNet paves the way for deploying sophisticated LLMs across a diverse array of hardware platforms, democratizing access to cutting-edge AI technologies.
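A simplified sketch of the BitLinear computation, assuming the formulation in the BitNet paper: weights are centralized and binarized with a sign function and scaled by beta = mean(|W|); activations are quantized to 8 bits by absmax scaling; and the output is rescaled by beta * gamma / Q_b. It omits the LayerNorm that BitLinear applies before quantization, and it rounds and clips activations to the signed 8-bit range [-128, 127], a practical simplification of the paper’s epsilon-based clipping. Function names are hypothetical.

```python
import numpy as np

def binarize_weights(w: np.ndarray):
    """Centralize to zero mean, binarize with Sign, and compute the scale beta."""
    alpha = w.mean()
    w_bin = np.where(w - alpha > 0, 1.0, -1.0)  # Sign(): +1 if positive, else -1
    beta = np.abs(w).mean()                      # (1 / nm) * ||W||_1
    return w_bin, beta

def absmax_quantize(x: np.ndarray, bits: int = 8):
    """Scale activations by their absolute maximum into the signed b-bit range."""
    q_b = 2 ** (bits - 1)                        # 128 for 8 bits
    gamma = np.abs(x).max()                      # ||x||_inf
    x_q = np.clip(np.round(x * q_b / gamma), -q_b, q_b - 1)
    return x_q, gamma, q_b

def bitlinear(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Simplified BitLinear forward (LayerNorm and grouping omitted)."""
    w_bin, beta = binarize_weights(w)
    x_q, gamma, q_b = absmax_quantize(x)
    # Binary-weight x 8-bit-activation matmul, then rescale to the original range.
    return (x_q @ w_bin.T) * beta * gamma / q_b

rng = np.random.default_rng(0)
w = rng.standard_normal((16, 64)) * 0.02
x = rng.standard_normal((4, 64))
y = bitlinear(x, w)
print(y.shape)  # (4, 16)
```

The expensive part, x_q @ w_bin.T, involves only 8-bit integers and signs; the two floating-point scales are applied once per output.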

The BitNet architecture introduces several further techniques to stabilize training and improve model performance. It uses Group Quantization and Normalization to support efficient model parallelism: weights and activations are divided into groups, and each group’s quantization parameters are estimated independently. Because these parameters can then be computed locally on each device, no extra cross-device communication is needed, which helps the model scale. BitNet also uses the straight-through estimator (STE) to backpropagate gradients through non-differentiable functions such as sign and clipping, and it trains with mixed precision: while weights and activations are quantized, gradients, optimizer states, and a latent high-precision copy of the weights are kept in higher precision, preserving training stability and accuracy.
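To illustrate why grouping removes communication, the sketch below splits a weight matrix into row groups, as if each group lived on a different device, and computes each group’s alpha and beta from that group alone. Splitting along rows and the function name are illustrative assumptions; the paper applies the same idea to both weights and activations.

```python
import numpy as np

def group_binarize(w: np.ndarray, n_groups: int):
    """Binarize each row group with its own alpha and beta (no cross-group statistics)."""
    groups = np.array_split(w, n_groups, axis=0)  # e.g., one group per device
    out, betas = [], []
    for g in groups:
        alpha = g.mean()                          # computed from this group only
        beta = np.abs(g).mean()
        out.append(np.where(g - alpha > 0, 1.0, -1.0))
        betas.append(beta)
    return np.vstack(out), np.array(betas)

rng = np.random.default_rng(0)
w = rng.standard_normal((8, 4))
w_bin, betas = group_binarize(w, n_groups=2)

# Each beta equals what a device holding only that shard would compute locally.
print(np.allclose(betas, [np.abs(w[:4]).mean(), np.abs(w[4:]).mean()]))  # True
```

With a single global alpha and beta, every device would need to exchange partial sums before quantizing; per-group statistics trade a little approximation quality for zero extra communication.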

In the authors’ evaluations, BitNet achieves performance competitive with FP16 Transformer baselines while substantially reducing memory footprint and energy consumption, and it outperforms post-training quantization methods at comparable low bit widths. BitNet also follows a scaling law similar to that of full-precision Transformers, suggesting the approach can keep pace as models grow. This underscores BitNet’s potential to make high-performance LLMs more accessible and environmentally sustainable, marking a significant milestone in the quest for scalable and efficient AI solutions. As the demand for sustainable AI practices grows, BitNet stands out as a critical advancement, heralding a new era of large language model development.

The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits

Delving into the fourth paper, The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits, we find BitNet b1.58, an innovative stride towards more efficient LLMs through ternary quantization. This approach extends the 1-bit paradigm by adding a third quantization state, so each parameter (weight) can take one of three values: -1, 0, or +1. Encoding one of three values takes log2(3) ≈ 1.58 bits, which is where the name comes from. The authors report that, starting at 3 billion parameters, BitNet b1.58 matches a full-precision LLaMA-style baseline of the same size and training data in both perplexity and end-task performance, while being significantly cheaper in latency, memory, throughput, and energy consumption.

At the heart of BitNet b1.58’s methodology is an absmean quantization function that constrains weights to these ternary values. It divides the weight matrix by the average absolute value of its entries and then rounds each weight to the nearest integer among -1, 0, and +1, balancing efficiency against performance. As with 1-bit weights, matrix multiplications then need only additions and subtractions, while the zero state gives the model explicit support for feature filtering, which the authors credit for its stronger modeling capability. Constraining weights this way also sharply reduces the memory and energy footprint of LLM deployments.
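A minimal NumPy sketch of the absmean quantizer, following the formula in the BitNet b1.58 paper: divide by gamma + eps, where gamma is the mean absolute weight, then round and clip to [-1, 1]. The epsilon value and function name are assumptions. It also prints log2(3), the information content of one ternary weight.

```python
import math
import numpy as np

def absmean_ternary(w: np.ndarray, eps: float = 1e-5):
    """Quantize weights to {-1, 0, +1} using the absmean scale gamma."""
    gamma = np.abs(w).mean()                           # average absolute value
    w_t = np.clip(np.round(w / (gamma + eps)), -1, 1)  # RoundClip(W / (gamma + eps), -1, 1)
    return w_t, gamma

rng = np.random.default_rng(0)
w = rng.standard_normal((4, 6))
w_t, gamma = absmean_ternary(w)

print(w_t)
print(np.unique(w_t))  # a subset of [-1., 0., 1.]
print(math.log2(3))    # about 1.585 bits per ternary weight
```

Weights whose magnitude is less than about half the mean absolute value land on 0, which is how the zero state acts as a learned filter on input features.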

What sets BitNet b1.58 apart is not just its quantization scheme: the authors argue that it defines a new scaling law and training recipe for LLMs that are both high-performing and cost-effective across latency, memory usage, throughput, and energy consumption. This signals a shift towards models that are environmentally and economically sustainable as well as capable. BitNet b1.58’s ternary weights also open new avenues for hardware optimization: dedicated computing units designed for 1-bit LLMs could further amplify their efficiency in real-world scenarios, from cloud-based applications to edge devices, broadening the horizon for AI’s integration into everyday technology.
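The hardware argument rests on a simple property: with every weight in {-1, 0, +1}, a matrix-vector product needs no multiplications. Each output is the sum of the inputs whose weight is +1 minus the sum of those whose weight is -1, and zero weights are skipped. The loop-based NumPy sketch below illustrates that arithmetic only; it is deliberately slow and omits the scale factor a real BitNet b1.58 layer would apply afterward. The function name is hypothetical.

```python
import numpy as np

def ternary_matvec(w_t: np.ndarray, x: np.ndarray) -> np.ndarray:
    """Compute w_t @ x for w_t in {-1, 0, +1} using only additions and subtractions."""
    out = np.zeros(w_t.shape[0], dtype=x.dtype)
    for i, row in enumerate(w_t):
        out[i] = x[row == 1].sum() - x[row == -1].sum()  # zero weights contribute nothing
    return out

rng = np.random.default_rng(0)
w_t = rng.integers(-1, 2, size=(3, 8)).astype(np.int8)  # random ternary weights
x = rng.standard_normal(8)

print(np.allclose(ternary_matvec(w_t, x), w_t @ x))  # True
```

Dedicated kernels or hardware are what turn this property into real speed and energy savings.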

In conclusion, these four papers shed light on the remarkable strides being made in Large Language Model (LLM) efficiency, from memory optimization and parameter-efficient adaptation to quantization, each contributing uniquely to the quest for more scalable and accessible AI technologies. From ZeRO’s memory optimization strategies and LoRA’s low-rank adaptation technique to BitNet’s binary quantization and its evolution into BitNet b1.58’s ternary quantization, the common thread is a consistent drive to minimize computational costs while maximizing performance and sustainability.

- ZeRO eliminates memory redundancies in data-parallel training by partitioning optimizer states, gradients, and parameters across devices, significantly reducing per-device memory, enabling models with over 100 billion parameters to be trained on existing hardware, and putting trillion-parameter training within reach.

- LoRA offers a method for adapting pre-trained models with minimal additional parameters, presenting a solution that balances the need for model specificity with the constraints of computational resources, thereby democratizing access to sophisticated AI tools.

- BitNet advances the efficiency frontier with 1-bit weights, drastically lowering the energy and memory demands of LLMs while remaining competitive with full-precision baselines, paving the way for more sustainable AI practices.

- BitNet b1.58 extends this narrative by introducing ternary quantization, further enhancing model efficiency and signaling the potential for specialized hardware optimizations, which could revolutionize the deployment of LLMs across a myriad of platforms and devices.

Together, these papers underscore a pivotal shift in AI research towards models that are not just powerful but also pragmatically deployable in diverse environments. This shift is critical in an era where the environmental, economic, and accessibility implications of technology cannot be overlooked. As we advance, the insights garnered from these studies will undoubtedly play a crucial role in shaping the future of AI development, guiding us towards solutions that are not only technologically advanced but also ethically responsible and broadly accessible. The convergence of these innovative quantization techniques with the evolving needs of society promises to catalyze a new era of AI applications, making the benefits of LLMs universally attainable and marking a significant milestone in our collective journey towards a more inclusive and sustainable future in artificial intelligence.

Thank you for taking the time to explore the forefront of AI with us! 🙌 Your insights and perspectives enrich our journey towards a more innovative and sustainable future. If this exploration into the evolving world of AI and quantization techniques sparked any thoughts or questions, I encourage you to share your views in the comments or spread the word by sharing this post. Together, let’s continue to inspire and drive forward the conversation around the transformative power of technology. Let’s shape the future of AI, one discussion at a time. 💬✨

#AICommunity #ArtificialIntelligence #SustainableTechnology #Innovation #LLMs #Quantization #TechCommunity